Tier-1 Canadian Telco — Digital Services Integration & Entitlements (Partner Façade + Governance)

Summary

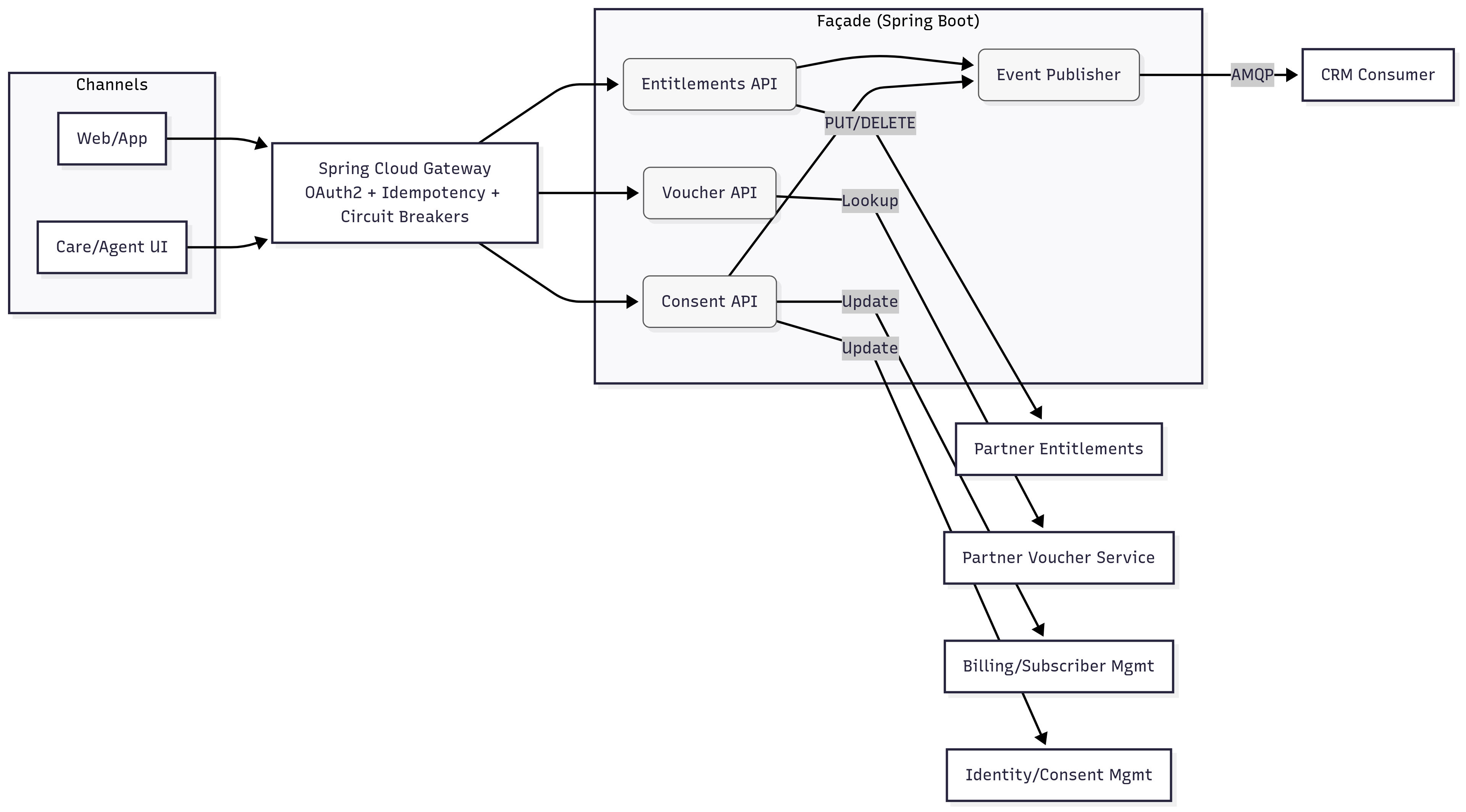

Built an API-led partner façade for digital services (streaming, sports, content), unifying entitlements, voucher lookups, and consents behind stable REST contracts with idempotency, retries, auditing, and observability. The façade fronts heterogeneous partner endpoints and returns consistent responses—cutting activation failures and billing handoff issues.

Problem

- Each partner exposed different request/response shapes and error codes for entitlements, vouchers, and consent updates.

- Channels (web/app/care) lacked uniform SLAs, error semantics, and governed lifecycles; small backend changes caused front-end regressions.

- CRM updates tightly coupled to activation paths caused timeouts and user-visible failures.

Solution Mechanics

Primary pattern: API-led orchestration (Java + Spring Boot).

-

Gateway & security

Spring Cloud Gateway with OAuth2/OIDC, idempotency keys, timeouts, and circuit breakers. -

Domain APIs (Spring Boot services)

- Entitlements API — Create/Update (PUT) and Delete entitlement records via a consistent resource model (

subscriberId,productCodes,brand,source,startAt,endAt,traceId). - Voucher API — Single lookup surface for voucher eligibility/details across partners (normalized fields like

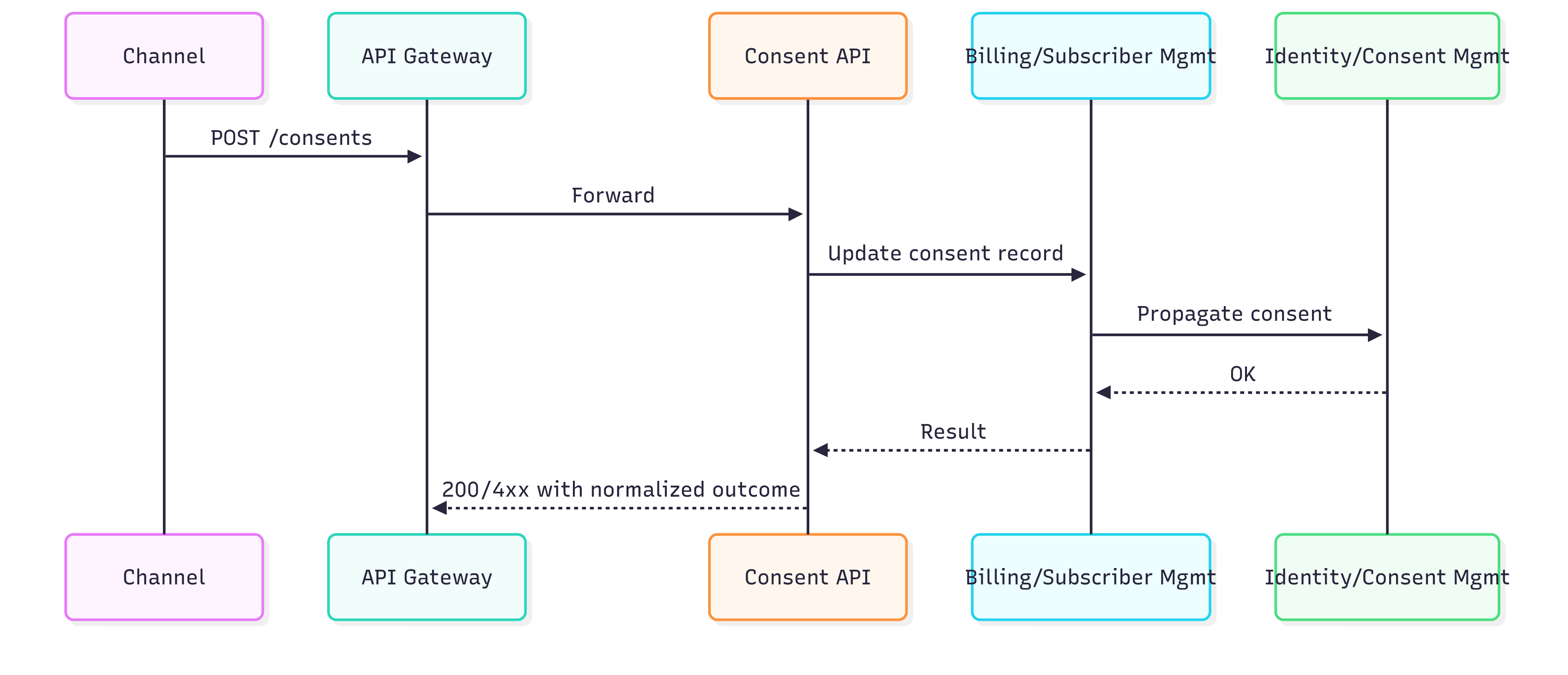

voucherId,code,status,redeemUrl). - Consent API — Accepts channel consent payloads and writes to authoritative systems (e.g., billing/subscriber & identity/consent systems) in one governed flow with auditable outcomes.

- Entitlements API — Create/Update (PUT) and Delete entitlement records via a consistent resource model (

-

Async side-effects

RabbitMQ (AMQP) publishes CRM association updates and other non-critical writes to decouple user flows from downstream latency. -

Data & state

Postgres (audit + minimal state), Redis (idempotency + short-term correlation), Micrometer → Grafana (SLOs, partner error/timeout rates). -

Governance

Versioned OpenAPI contracts, response/error normalization tables, partner adapters as replaceable modules, and environment-specific configuration.
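The adapter-per-partner idea can be sketched in plain Java. This is a minimal illustration, not the project's code: the normalized fields (voucherId, code, status, redeemUrl) come from the Voucher API description, while the partner identifiers, raw field names, status vocabularies, and class names (`VoucherAdapter`, `VoucherFacade`, etc.) are hypothetical. Raw partner payloads are modeled as flat string maps to keep the example dependency-free.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

// Normalized voucher item exposed by the façade (field names from the case study).
record VoucherItem(String voucherId, String code, VoucherStatus status, String redeemUrl) {}

// Hypothetical normalized status vocabulary.
enum VoucherStatus { ACTIVE, REDEEMED, EXPIRED, UNKNOWN }

// One adapter per partner; each hides that partner's payload shape.
interface VoucherAdapter {
    String partner();
    VoucherItem normalize(Map<String, String> raw);
}

// Hypothetical partner A: snake_case fields, word-based status values.
final class PartnerAVoucherAdapter implements VoucherAdapter {
    public String partner() { return "partner-a"; }

    public VoucherItem normalize(Map<String, String> raw) {
        VoucherStatus status = switch (raw.getOrDefault("state", "").toLowerCase(Locale.ROOT)) {
            case "valid" -> VoucherStatus.ACTIVE;
            case "used" -> VoucherStatus.REDEEMED;
            case "expired" -> VoucherStatus.EXPIRED;
            default -> VoucherStatus.UNKNOWN;
        };
        return new VoucherItem(raw.get("voucher_id"), raw.get("promo_code"), status, raw.get("redeem_link"));
    }
}

// Hypothetical partner B: camelCase fields, numeric status codes.
final class PartnerBVoucherAdapter implements VoucherAdapter {
    public String partner() { return "partner-b"; }

    public VoucherItem normalize(Map<String, String> raw) {
        VoucherStatus status = switch (raw.getOrDefault("statusCode", "")) {
            case "1" -> VoucherStatus.ACTIVE;
            case "2" -> VoucherStatus.REDEEMED;
            case "3" -> VoucherStatus.EXPIRED;
            default -> VoucherStatus.UNKNOWN;
        };
        return new VoucherItem(raw.get("id"), raw.get("code"), status, raw.get("url"));
    }
}

// Façade side: routes to the partner's adapter and always returns the same shape.
final class VoucherFacade {
    private final Map<String, VoucherAdapter> adapters;

    VoucherFacade(List<VoucherAdapter> adapters) {
        this.adapters = adapters.stream()
            .collect(Collectors.toMap(VoucherAdapter::partner, Function.identity()));
    }

    List<VoucherItem> lookup(String partner, List<Map<String, String>> rawItems) {
        VoucherAdapter adapter = adapters.get(partner);
        if (adapter == null) {
            throw new IllegalArgumentException("No adapter registered for " + partner);
        }
        return rawItems.stream().map(adapter::normalize).toList();
    }
}
```

Replacing or upgrading a partner then means swapping one adapter class; the `VoucherItem` shape that channels consume stays the same.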

Diagram 1 - Context Diagram — Partner façade

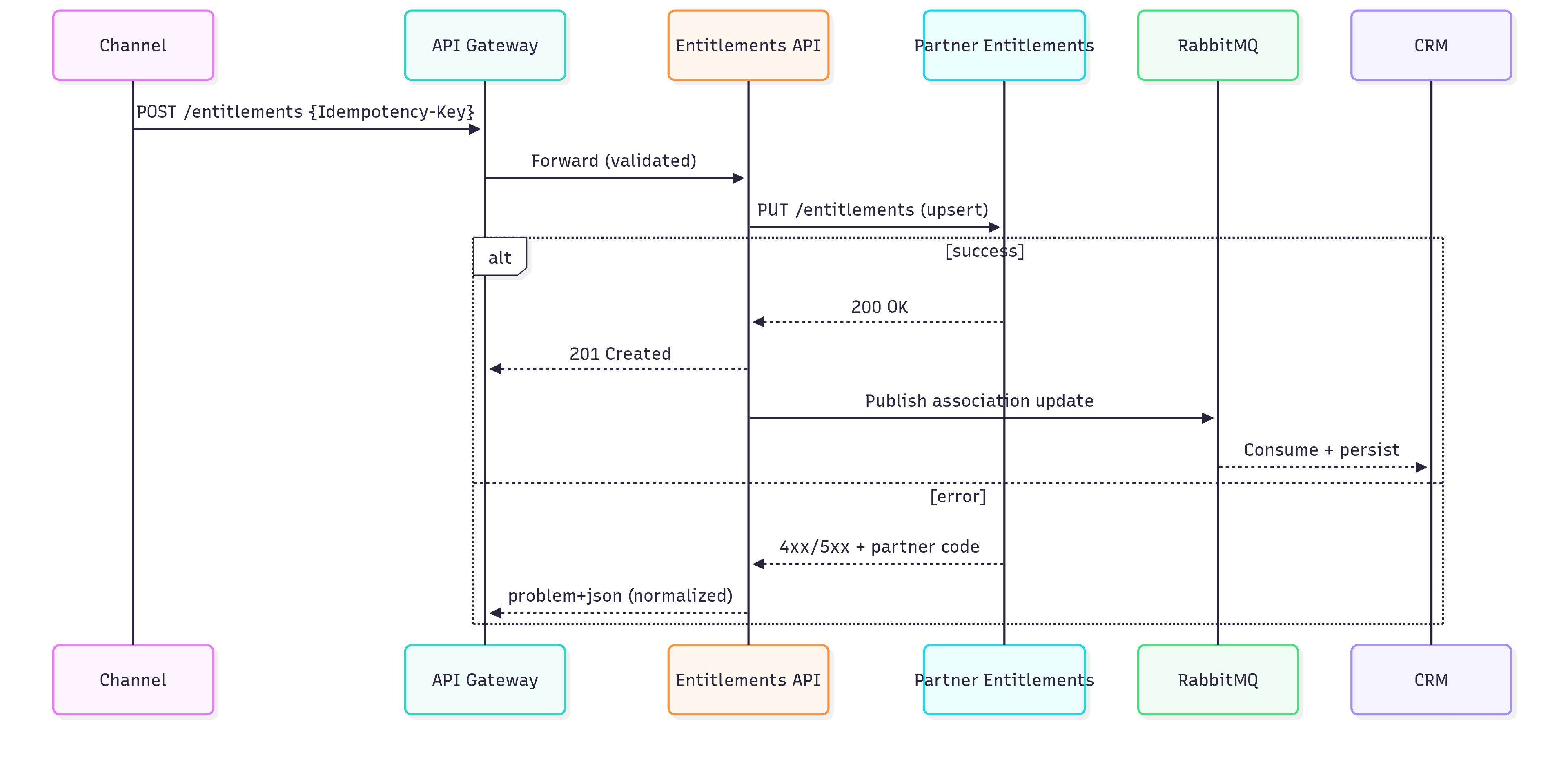

Diagram 2 - Sequence — Entitlement activation

Diagram 3 - Sequence — Consent update

Process Flow

- A channel calls the Entitlements API with an Idempotency-Key header; the request is validated and traced end to end.

- The service invokes the Partner Entitlements endpoint (hidden behind an adapter) via HTTP PUT for upsert or HTTP DELETE for removal; partner error responses are normalized to application/problem+json.

- Voucher lookups hit the Voucher API, which queries the relevant Partner Voucher service and returns a consistent list of voucher items within a 2s p95 SLA (configurable).

- Consent updates go to the Consent API, which writes to Billing/Subscriber Management and Identity/Consent Management systems; both results are combined into a single, normalized outcome.

- On successful entitlement/consent changes, an event is published to RabbitMQ for CRM synchronization and other subscribers; retries and DLQs are handled at the messaging layer.

- Observability: Gateway and services emit p50/p95 latency, timeout/error rates per adapter, DLQ depth, and retry success; traceId and correlationId flow through logs and headers.

- Resilience: Per-adapter circuit breakers, exponential backoff, idempotent retries, and bounded timeouts protect UX from partner instability.

- Change safety: Partner changes (URLs, payloads, auth) are absorbed by the adapter layer behind stable façade contracts.
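The consent step (two downstream writes merged into one outcome) maps naturally onto `CompletableFuture`. The sketch below rests on assumptions: the downstream calls are stand-in `Supplier<Boolean>` values, the per-write timeout is a constructor parameter, and the `APPLIED` / `PARTIALLY_APPLIED` / `NOT_APPLIED` labels and class names are hypothetical, since the case study does not say how partial failures are reported or compensated.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Result of one downstream consent write.
enum WriteStatus { OK, FAILED, TIMED_OUT }

// Single normalized outcome returned to the channel (labels are hypothetical).
record ConsentOutcome(WriteStatus billing, WriteStatus identity) {
    String overall() {
        if (billing == WriteStatus.OK && identity == WriteStatus.OK) return "APPLIED";
        if (billing == WriteStatus.OK || identity == WriteStatus.OK) return "PARTIALLY_APPLIED";
        return "NOT_APPLIED";
    }
}

final class ConsentFanOut {
    private final Duration perWriteTimeout;

    ConsentFanOut(Duration perWriteTimeout) { this.perWriteTimeout = perWriteTimeout; }

    // Runs the billing and identity writes in parallel and merges them into one outcome.
    ConsentOutcome apply(Supplier<Boolean> billingWrite, Supplier<Boolean> identityWrite) {
        CompletableFuture<WriteStatus> billing = bounded(billingWrite);
        CompletableFuture<WriteStatus> identity = bounded(identityWrite);
        return billing.thenCombine(identity, ConsentOutcome::new).join();
    }

    // Maps a write to OK/FAILED, or TIMED_OUT if it exceeds the timeout.
    // Note: orTimeout stops waiting but does not cancel the underlying call.
    private CompletableFuture<WriteStatus> bounded(Supplier<Boolean> write) {
        return CompletableFuture.supplyAsync(write)
            .thenApply(ok -> Boolean.TRUE.equals(ok) ? WriteStatus.OK : WriteStatus.FAILED)
            .orTimeout(perWriteTimeout.toMillis(), TimeUnit.MILLISECONDS)
            .exceptionally(ex -> isTimeout(ex) ? WriteStatus.TIMED_OUT : WriteStatus.FAILED);
    }

    // Timeouts arrive raw from orTimeout; supplier failures arrive wrapped in CompletionException.
    private static boolean isTimeout(Throwable ex) {
        return ex instanceof TimeoutException || ex.getCause() instanceof TimeoutException;
    }
}
```

A slow downstream system then costs the channel at most one timeout, and the per-system statuses are what an audit record or a reconciliation job would act on.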

Outcomes

- Activation success rate improved via idempotency and standardized error handling on entitlement PUT/DELETE. (Proxy; based on normalized error distributions and reduced ambiguous failures.)

- Cleaner billing handoffs by consolidating voucher/entitlement semantics into one façade with consistent SLAs. (Verified in pre-prod tests and staged rollouts.)

- Lower coupling to CRM: user flows no longer block on CRM writes, because CRM sync runs asynchronously with DLQ safeguards. (Modeled; validated via failure drills.)
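The non-blocking CRM path with DLQ safeguards can be illustrated with an in-memory simulation of the retry-then-dead-letter policy. This is a sketch under stated assumptions: `CrmSyncEvent`, `CrmSyncSimulator`, and the attempt limit are hypothetical, and the `ArrayDeque` stands in for a RabbitMQ queue. In RabbitMQ itself, dead-letter routing is configured on the queue, for example via the `x-dead-letter-exchange` and `x-message-ttl` queue arguments.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical CRM association event emitted after a successful entitlement change.
record CrmSyncEvent(String subscriberId, String traceId, int attempt) {
    CrmSyncEvent nextAttempt() { return new CrmSyncEvent(subscriberId, traceId, attempt + 1); }
}

final class CrmSyncSimulator {
    private final Deque<CrmSyncEvent> queue = new ArrayDeque<>();
    private final List<CrmSyncEvent> delivered = new ArrayList<>();
    private final List<CrmSyncEvent> deadLetters = new ArrayList<>();
    private final int maxAttempts;
    private final Predicate<CrmSyncEvent> crmWrite; // true when CRM accepted the update

    CrmSyncSimulator(int maxAttempts, Predicate<CrmSyncEvent> crmWrite) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        this.maxAttempts = maxAttempts;
        this.crmWrite = crmWrite;
    }

    // Called on the activation path: enqueue and return; the user never waits on CRM.
    void publish(String subscriberId, String traceId) {
        queue.addLast(new CrmSyncEvent(subscriberId, traceId, 1));
    }

    // Consumer loop: requeue failed writes until maxAttempts, then dead-letter them.
    // (No delay between retries here; a real setup would back off, e.g. via a TTL'd retry queue.)
    void drain() {
        while (!queue.isEmpty()) {
            CrmSyncEvent event = queue.pollFirst();
            if (crmWrite.test(event)) {
                delivered.add(event);
            } else if (event.attempt() >= maxAttempts) {
                deadLetters.add(event); // kept for inspection and replay tooling
            } else {
                queue.addLast(event.nextAttempt());
            }
        }
    }

    List<CrmSyncEvent> delivered() { return List.copyOf(delivered); }
    List<CrmSyncEvent> deadLetters() { return List.copyOf(deadLetters); }
}
```

With a limit of three attempts, a CRM that recovers on the second try still receives the update, while a persistently failing one ends up in the dead-letter list with `attempt == 3` instead of blocking the queue.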

Strategic Business Impact

- Fewer failed activations → fewer support calls (Proxy): standard errors + retries reduce escalations.

- Churn risk lowered during promotions (Modeled): consistent voucher eligibility & issuance responses prevent promo-day spikes.

- Faster partner launches (Proxy): adapters isolate partner API changes, avoiding channel rework.

Method tags: Verified (observed in tests), Modeled (simulations/failure drills), Proxy (leading indicators: normalized error codes, DLQ stability).

Role & Scope

Owned architecture and delivery for gateway, Spring services, partner adapters, error/governance models, and run-time SLOs; aligned flows across channels and downstream platforms.

Key Decisions & Trade-offs

- API-led front door over direct channel→partner calls: stable contracts at the cost of more initial effort.

- Adapter-per-partner isolates faults and simplifies upgrades vs a single generic connector.

- Async CRM sync preserves UX but introduces eventual consistency (covered by clear SLAs and reconciliation).

- Error normalization keeps partner-specific codes in telemetry while exposing a uniform client model.

- Strict timeouts/SLOs improve predictability but require capacity planning and back-pressure handling.
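The error-normalization decision can be sketched as a lookup table plus two renderings of the same failure: an RFC 9457 (formerly RFC 7807) problem body for channels, and a telemetry line that keeps the raw partner code. Everything here is hypothetical: the partner codes, categories, status mappings, and the `https://errors.example.com/...` type URIs are placeholders. The JSON is assembled by hand to stay dependency-free and assumes the inputs contain no characters that need escaping; in a Spring Boot 3 service, Spring Framework's `ProblemDetail` type would normally produce this body.

```java
import java.util.Locale;
import java.util.Map;

// Uniform, client-facing error categories (hypothetical).
enum ErrorCategory {
    PARTNER_TIMEOUT(504, "Partner did not respond in time"),
    NOT_ELIGIBLE(422, "Subscriber is not eligible for this product"),
    NOT_FOUND(404, "Subscriber or product not found"),
    PARTNER_ERROR(502, "Partner returned an unexpected error");

    final int status;
    final String title;

    ErrorCategory(int status, String title) {
        this.status = status;
        this.title = title;
    }
}

final class ProblemMapper {
    static final String MEDIA_TYPE = "application/problem+json";

    // Normalization table: "partner:partnerCode" -> category. Entries are illustrative.
    private static final Map<String, ErrorCategory> TABLE = Map.of(
        "partner-a:E408", ErrorCategory.PARTNER_TIMEOUT,
        "partner-a:E4221", ErrorCategory.NOT_ELIGIBLE,
        "partner-b:NO_SUCH_ACCOUNT", ErrorCategory.NOT_FOUND);

    // Unmapped partner codes fall back to a generic category instead of leaking through.
    static ErrorCategory categorize(String partner, String partnerCode) {
        return TABLE.getOrDefault(partner + ":" + partnerCode, ErrorCategory.PARTNER_ERROR);
    }

    // Client-facing body: standard problem fields plus a traceId extension member.
    static String toProblemJson(ErrorCategory c, String instance, String traceId) {
        return String.format(
            "{\"type\":\"https://errors.example.com/%s\",\"title\":\"%s\",\"status\":%d,"
                + "\"instance\":\"%s\",\"traceId\":\"%s\"}",
            c.name().toLowerCase(Locale.ROOT).replace('_', '-'), c.title, c.status, instance, traceId);
    }

    // Telemetry line: keeps the partner-specific code for dashboards only.
    static String telemetry(String partner, String partnerCode, ErrorCategory c, String traceId) {
        return "partner=" + partner + " partnerCode=" + partnerCode
            + " category=" + c.name() + " traceId=" + traceId;
    }
}
```

Channels only ever see the category-level body, while dashboards can still break errors down by partner code, which is the trade-off the decision above describes.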

Risks & Mitigations

- Partner instability / timeouts → per-adapter circuit breakers, bulkheads, canned-fault test suites.

- Schema drift → contract tests and versioned adapters; change gates on payloads and auth.

- Queue backlog → DLQs, replay tooling, message TTLs aligned to business SLAs.

- Idempotency misuse → TTL-bounded keys, replay detection, and alerting on duplicate suppression.
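The idempotency mitigations (TTL-bounded keys, replay detection, a dedupe counter to alert on) fit in a small store. This in-memory sketch is an assumption, not the Redis-backed implementation the case study describes: the class and enum names are hypothetical, request bodies are represented by a precomputed hash, and a single lock keeps the logic easy to verify. With Redis, the usual building block is an atomic `SET key value NX PX <ttl-ms>` to reserve a key.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// What happened when a request presented its Idempotency-Key.
enum IdempotencyResult { FIRST_SEEN, REPLAY, KEY_REUSED_WITH_DIFFERENT_PAYLOAD }

final class IdempotencyStore {
    // Cached outcome per key: request hash, stored response, expiry time.
    private record Entry(String payloadHash, String response, Instant expiresAt) {}

    record Outcome(IdempotencyResult result, String response) {}

    private final Map<String, Entry> entries = new HashMap<>();
    private final Duration ttl;
    private final Supplier<Instant> clock;
    private long dedupeHits;

    IdempotencyStore(Duration ttl, Supplier<Instant> clock) {
        this.ttl = ttl;
        this.clock = clock;
    }

    // Runs partnerCall at most once per key within the TTL; replays return the cached response.
    // If partnerCall throws, nothing is stored, so the client may retry with the same key.
    synchronized Outcome execute(String key, String payloadHash, Supplier<String> partnerCall) {
        Instant now = clock.get();
        Entry existing = entries.get(key);
        if (existing != null && now.isBefore(existing.expiresAt())) {
            if (!existing.payloadHash().equals(payloadHash)) {
                // Same key, different body: reject rather than guess (idempotency misuse).
                return new Outcome(IdempotencyResult.KEY_REUSED_WITH_DIFFERENT_PAYLOAD, null);
            }
            dedupeHits++;
            return new Outcome(IdempotencyResult.REPLAY, existing.response());
        }
        // New or expired key: call the partner once and cache the result until now + ttl.
        String response = partnerCall.get();
        entries.put(key, new Entry(payloadHash, response, now.plus(ttl)));
        return new Outcome(IdempotencyResult.FIRST_SEEN, response);
    }

    // Exposed so duplicate suppression can be graphed and alerted on.
    synchronized long dedupeHits() { return dedupeHits; }
}
```

Holding one lock across the partner call serializes every request, which is fine for illustration but not for production; a shared store with per-key reservation avoids that and also works across service instances. Expired entries are only overwritten here, whereas a Redis TTL would evict them.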

Suggested Metrics (run-time SLOs)

- Entitlement write p95 and timeout rate by partner.

- Voucher lookup p95 and error rate.

- Consent success ratio and propagation latency to downstream systems.

- CRM sync lag, DLQ depth, retry success rate.

- Idempotency dedupe hit rate; distribution of problem+json error categories.
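For offline checks (pre-prod runs, failure drills), the p95 and timeout-rate metrics above can be computed directly from latency samples. In production these figures would normally come from Micrometer timers and the metrics backend rather than raw samples; this JDK-only sketch uses the nearest-rank percentile definition, and the `SloCheck` name and sample values are hypothetical. The 2 s budget mirrors the voucher lookup SLA stated in the process flow.

```java
import java.time.Duration;
import java.util.Arrays;

final class SloCheck {
    // Nearest-rank percentile: the smallest sample with at least p% of samples <= it.
    static long percentileMillis(long[] samplesMillis, int p) {
        if (samplesMillis.length == 0) throw new IllegalArgumentException("no samples");
        if (p < 1 || p > 100) throw new IllegalArgumentException("p must be in [1, 100]");
        long[] sorted = samplesMillis.clone();
        Arrays.sort(sorted);
        int rank = (int) (((long) p * sorted.length + 99) / 100); // ceil(p * n / 100) in integers
        return sorted[rank - 1];
    }

    // True when observed p95 latency is within the budget.
    static boolean meetsP95(long[] samplesMillis, Duration budget) {
        return percentileMillis(samplesMillis, 95) <= budget.toMillis();
    }

    // Fraction of calls slower than the adapter timeout.
    static double timeoutRate(long[] samplesMillis, Duration timeout) {
        if (samplesMillis.length == 0) return 0.0;
        long slow = Arrays.stream(samplesMillis).filter(ms -> ms > timeout.toMillis()).count();
        return (double) slow / samplesMillis.length;
    }
}
```

For twenty samples from 100 ms to 2000 ms in 100 ms steps, p95 is 1900 ms (inside a 2 s budget) and one call in four exceeds a 1.5 s timeout.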

Closing principle

Stabilize at the façade, specialize at the edge. Wrap partner differences behind clean, governed APIs so channels never feel backend turbulence.